Setting Up a SharePoint 2013 High-Trust On-Premises Add-In Development / Production Environment

Set up the remote IIS site

- This is the site the add-in will connect to for data and/or interactivity.

- No SharePoint components are necessary aside from the web project being deployed as an ASP.NET site.

- Authentication is managed via certificates (.cer/.pfx).

Step 1 – Enable IIS services, including HTTPS, certificates, and management services

Step 2 – Install the certificate for the site

- This can be a self-signed domain certificate (issued from your development farm Certificate Authority) or a certificate from a certificate vendor.

- The certificate should include all certificates in the chain. If it was issued by the local CA, the CA certificate must be in the Local Computer -> Trusted Root Certification Authorities store.

Import the certificate into IIS on the remote web server with these steps:

- In IIS Manager, select the ServerName node in the tree view on the left.

- Double-click the Server Certificates icon.

- Select Import in the Actions pane on the right.

- On the Import Certificate dialog, use the browse button to browse to the .pfx file, and then enter the password of the certificate.

- If you are using IIS Manager 8, there is a Select Certificate Store drop down. Choose Personal. (This refers to the "personal" certificate storage of the computer, not the user.)

- If you don't already have a cer version, or you do but it includes the private key, enable Allow this certificate to be exported.

- Click OK

- Open MMC (Start -> mmc) and add the Certificates snap-in for the local computer account.

- Navigate to Certificates (Local Computer) -> Personal -> Certificates

- Double-click the certificate added above and then open the Details tab

- Select the Serial Number field so that the entire serial number is visible in the box.

- Copy the serial number (Ctrl+C) to a text file and remove all spaces (including any leading and trailing spaces).

- Copy the Authority Key Identifier value to the text file, remove the spaces, and convert it to GUID format (xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx).

- Save the text file to a location accessible from the SharePoint server (e.g. a network share).

- Copy the pfx certificate to the same location
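The clean-up of the copied serial number can be scripted so that no stray characters survive. The following is a minimal PowerShell sketch, not part of the original procedure; the function name `Remove-NonHexCharacters` and the sample value are made up for illustration.

```powershell
# Hypothetical helper: keeps only hexadecimal digits, which removes spaces
# and any invisible characters picked up when copying from the dialog.
function Remove-NonHexCharacters {
    param([Parameter(Mandatory = $true)][string]$Value)
    return ($Value -replace '[^0-9A-Fa-f]', '')
}

# Sample value only; paste the serial number copied from the Details tab.
Remove-NonHexCharacters -Value ' 4a 00 00 1f 2b '   # returns 4a00001f2b
```

The same value is also exposed, already without spaces, as the `SerialNumber` property of the certificate objects under `Cert:\LocalMachine\My`.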

Step 3 – Create a .cer version of the certificate

- This contains the public key of the remote web server and is used by SharePoint to encrypt requests from the remote web application and validate the access tokens in those requests. It is created on the remote web server and then moved to the SharePoint farm.

1. In IIS Manager, select the ServerName node in the tree view on the left.

2. Double-click Server Certificates.

3. In Server Certificates view, double-click the certificate to display the certificate details.

4. On the Details tab, choose Copy to File to launch the Certificate Export Wizard, and then choose Next.

5. Use the default value No, do not export the private key, and then choose Next.

6. Use the default values on the next page. Choose Next.

7. Choose Browse and browse to the folder the serial text file was saved to above.

8. Choose Next.

9. Choose Finish.
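The export wizard steps above can also be done from an elevated PowerShell prompt. This is a sketch under assumptions: it relies on the `Export-Certificate` cmdlet from the PKI module (Windows Server 2012 / Windows 8 or later), and the serial number and file path are placeholders.

```powershell
# Placeholder values: use the serial number recorded in Step 2 and your own folder.
$serial  = 'serial'
$cerPath = 'C:\path\to\certs\cert.cer'

# Locate the certificate in the computer's Personal store by serial number.
$cert = Get-ChildItem -Path Cert:\LocalMachine\My |
    Where-Object { $_.SerialNumber -eq $serial }

# Export the public certificate only (DER-encoded .cer); no private key is written.
Export-Certificate -Cert $cert -FilePath $cerPath -Type CERT
```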

Step 4 – Create an IIS site to use 443 / SSL and the certificate created

- In IIS Manager, right-click the Sites folder and select Add Website

- Give the site a meaningful name (no spaces or special characters)

- Select a location accessible to IIS processes and the app pool user (I use a new subdirectory of inetpub, as it inherits the necessary permissions).

- Under Bindings, select HTTPS in the Type drop down list.

- Select All Unassigned in the IP address drop down list or specify the IP address if desired.

- Enter the port in the Port text box. If you specify a port other than 443, you must use the same port number when you register the SharePoint Add-in on appregnew.aspx.

- In the Host name box, enter the host name used (e.g. mysub.mysite.com) and check Require Server Name Indication.

- In the SSL certificate drop down list, select the certificate that you used above.

- Click OK.

- Click Close.

You may get a warning. If so, select the Default Web Site and click Bindings in the right menu. Make sure the https binding for IP address ‘All Unassigned’ is set and bound to a star (wildcard) certificate (the default for the server). Also make sure this binding does not require SNI.
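For repeatable environments, the site and its HTTPS binding can be created with the WebAdministration module instead of the IIS Manager dialogs. The sketch below assumes IIS 8 or later (for the `-SslFlags` parameter); the site name, host name, path, and serial number are placeholders. Unlike the Add Website dialog, `New-Website` does not create a dedicated application pool unless one is specified.

```powershell
Import-Module WebAdministration

# Placeholder values for illustration only.
$siteName = 'AddInRemoteWeb'
$hostName = 'mysub.mysite.com'
$path     = 'C:\inetpub\AddInRemoteWeb'
$serial   = 'serial'   # serial number recorded in Step 2

# Create the folder and an HTTPS site on port 443; SslFlags 1 means "require SNI".
New-Item -ItemType Directory -Path $path -Force | Out-Null
New-Website -Name $siteName -PhysicalPath $path -HostHeader $hostName `
    -Port 443 -Ssl -SslFlags 1

# Attach the certificate from the computer's Personal ("My") store to the binding.
$cert = Get-ChildItem -Path Cert:\LocalMachine\My |
    Where-Object { $_.SerialNumber -eq $serial }
$binding = Get-WebBinding -Name $siteName -Protocol 'https'
$binding.AddSslCertificate($cert.Thumbprint, 'My')
```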

Step 5 – Configure authentication for the web application

When a new web application is installed in IIS, it is initially configured for anonymous access, but almost all high-trust SharePoint Add-ins are designed to require authentication of users, so you need to change it.

- In IIS Manager, highlight the web application in the Connections pane. It will be either a peer website of the Default Web Site or a child of the Default Web Site.

- Double-click the Authentication icon in the center pane to open the Authentication pane.

- Highlight Anonymous Authentication and then click Disable in the Actions pane.

- Highlight the authentication system that the web application is designed to use and click Enable in the Actions pane.

- If the web application's code uses the generated code in the TokenHelper and SharePointContext files without modifications to the user authentication parts of the files, then the web application is using Windows Authentication, so that is the option you should enable.

- If you are using the generated code files without modifications to the user authentication parts of the files, you also need to configure the authentication provider with the following steps:

- Highlight Windows Authentication in the Authentication pane.

- Click Providers.

- In the Providers dialog, ensure that NTLM is listed above Negotiate.

- Click OK.
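The two authentication switches above can be set from PowerShell as well. This is a minimal sketch assuming the WebAdministration module and a placeholder site name; it does not change the provider order, so NTLM versus Negotiate still needs to be checked in the Providers dialog.

```powershell
Import-Module WebAdministration

$siteName = 'AddInRemoteWeb'   # placeholder site name
$authPath = 'system.webServer/security/authentication'

# Turn anonymous access off and Windows Authentication on for this site only.
Set-WebConfigurationProperty -PSPath 'IIS:\' -Location $siteName `
    -Filter "$authPath/anonymousAuthentication" -Name enabled -Value $false
Set-WebConfigurationProperty -PSPath 'IIS:\' -Location $siteName `
    -Filter "$authPath/windowsAuthentication" -Name enabled -Value $true
```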

Step 6 – Enable App Pool profile loading

This is not strictly necessary in all situations, but it does head off an issue I’ve encountered repeatedly.

- Select Application Pools in the left menu, highlight the app pool used by the web application created in Step 4, and select Advanced Settings in the right-hand menu.

- Scroll down to Load User Profile and set it to True (also verify that the app pool account is the desired account for the application).
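The same setting can be applied through the IIS provider drive. A small sketch, assuming the WebAdministration module and a placeholder application pool name:

```powershell
Import-Module WebAdministration

$poolName = 'AddInRemoteWeb'   # placeholder application pool name

# Enable profile loading for the pool's identity.
Set-ItemProperty -Path "IIS:\AppPools\$poolName" `
    -Name processModel.loadUserProfile -Value $true

# Read the setting back to confirm it was applied.
Get-ItemProperty -Path "IIS:\AppPools\$poolName" -Name processModel.loadUserProfile
```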

NOTE

If the SharePoint site does not use a certificate purchased from a certificate vendor that is in the Trusted Certificate Store, the app will authenticate up to a point and then return the error ‘The remote certificate is invalid according to the validation procedure’. Adding the certificate and root chain to the Trusted Root Certificate Store has mixed results; for this reason it is recommended that the SharePoint site use a purchased certificate from a trusted vendor.

Restart the IIS site.

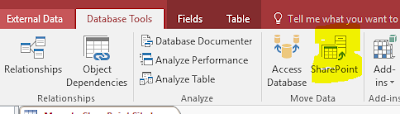

Set up the SharePoint Server to use the Add-in

Configure SharePoint to use the certificate

The procedures in this section can be performed on any SharePoint server on which the SharePoint Management Shell is installed.

- Create a folder and be sure that the application pool identities for the following IIS application pools have Read rights to it:

- SecurityTokenServiceApplicationPool

- The application pool that serves the IIS web site that hosts the parent SharePoint web application for your test SharePoint website.

I simply add ‘Everyone’ to the folder and give this user full access. The folder is deleted after this process is completed anyway, so this is not a big deal in a dev environment.

- Move (cut -> paste) the .cer file and the certificate serial text file from the remote web server to the folder you just created on the SharePoint server.

- The following procedure configures the certificate as a trusted token issuer in SharePoint. It is performed just once (for each high-trust SharePoint Add-in).

- Open the SharePoint Management Shell as an administrator and run the following script:

Add-PSSnapin Microsoft.SharePoint.PowerShell
cls

# a unique and meaningful name
$rootAuthName = "rootauthname"

# a unique and meaningful name
$tokenIssuerName = "tokenissuername"

# the path to the certificate created above
$publicCertPath = "C:\path\to\certs\cert.cer"

# obtained from the certificate properties Authority Key Identifier (Step 2)
$certAuthorityKeyID = "GUID"

$specificIssuerId = $certAuthorityKeyID.ToLower()
$certificate = New-Object System.Security.Cryptography.X509Certificates.X509Certificate2($publicCertPath)

# register the certificate as a trusted root authority
New-SPTrustedRootAuthority -Name $rootAuthName -Certificate $certificate

# the registered issuer name has the form <issuer id>@<realm>
$realm = Get-SPAuthenticationRealm
$fullIssuerIdentifier = $specificIssuerId + "@" + $realm

New-SPTrustedSecurityTokenIssuer -Name $tokenIssuerName -Certificate $certificate -RegisteredIssuerName $fullIssuerIdentifier -IsTrustBroker

iisreset

In the event something goes awry, the following will remove the two objects created by this script:

Get-SPTrustedRootAuthority $rootAuthName

Remove-SPTrustedRootAuthority $rootAuthName

Get-SPTrustedSecurityTokenIssuer $tokenIssuerName

Remove-SPTrustedSecurityTokenIssuer $tokenIssuerName

Delete the cer file from the file system of the SharePoint server.

Restart IIS (the server can take up to 24 hours to recognize these objects otherwise)
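To confirm that both objects were registered, they can be listed from the SharePoint Management Shell. This is a sketch only; it assumes the default property names exposed by these cmdlets, and the registered issuer name should appear in the `<issuer id>@<realm>` form built by the script above.

```powershell
Add-PSSnapin Microsoft.SharePoint.PowerShell -ErrorAction SilentlyContinue

# List the trusted root authorities and token issuers known to the farm.
Get-SPTrustedRootAuthority | Select-Object -Property Name
Get-SPTrustedSecurityTokenIssuer | Select-Object -Property Name, RegisteredIssuerName
```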

Register a new Add-In in SharePoint

Open your web application in a browser.

Navigate to <site root>/_layouts/15/appregnew.aspx

Generate an App ID, generate an App Secret, give it a display name (Title), enter the app domain (the iis address used above). Leave the redirect URI blank.

Copy the App Id to a text file and save. This is used wherever Client ID is referenced later!

Create the Add-In Project in Visual Studio

Fire up Visual Studio. (I’m using 2015 Community Edition in this example.)

Create a new project using the SharePoint Add-in template.

- Select the local SharePoint site to use for debugging, select ‘provider-hosted’, click next.

- Select SharePoint 2013, click next.

- Pick a web forms or MVC application type (I take the Web forms version)

- Select ‘Use a certificate …’, browse to the location of the certificate pfx file used in step 2, enter the password for the pfx.

- In the Issuer ID enter the value generated in the register the add-in step above (the App ID)

- Click Finish.

- Open AppManifest.xml in code view

- Paste in the Issuer ID (App ID) in the ClientId value

- Save and close. Open in design view.

- Change the Start Page to reflect the location of the remote web application:

- On the permissions tab, grant the add-in permissions needed (for this example I’m giving the add-in full control to the web)

- Open the Web.config in the Web Project

- In the System.web section, add the following key to enable meaningful error messages:

- In the appSettings section, fill in ClientId and IssuerId with the App Id generated in AppRegNew above. You will also need to add the ClientSigningCertificateSerialNumber key: this is the serial number of the certificate from the text file created in Step 2. There should be no spaces or hyphens in the value.

<appSettings>
  <add key="ClientId" value="guid" />
  <add key="ClientSigningCertificateSerialNumber" value="serial" />
  <add key="IssuerId" value="guid" />
</appSettings>

Modify the TokenHelper file

The TokenHelper.cs (or .vb) file generated by Office Developer Tools for Visual Studio needs to be modified to work with the certificate stored in the Windows Certificate Store and to retrieve it by its serial number.

- Near the bottom of the #region private fields part of the file are declarations for ClientSigningCertificatePath, ClientSigningCertificatePassword, and ClientCertificate. Remove all three.

- In their place, add the following line:

private static readonly string ClientSigningCertificateSerialNumber = WebConfigurationManager.AppSettings.Get("ClientSigningCertificateSerialNumber");

- Find the line that declares the SigningCredentials field. Replace it with the following line:

private static readonly X509SigningCredentials SigningCredentials = GetSigningCredentials(GetCertificateFromStore());

- Go to the #region private methods part of the file and add the following two methods:

private static X509SigningCredentials GetSigningCredentials(X509Certificate2 cert)
{
    return (cert == null) ? null
                          : new X509SigningCredentials(cert,
                                                       SecurityAlgorithms.RsaSha256Signature,
                                                       SecurityAlgorithms.Sha256Digest);
}

private static X509Certificate2 GetCertificateFromStore()
{
    if (string.IsNullOrEmpty(ClientSigningCertificateSerialNumber))
    {
        return null;
    }

    // Get the machine's personal store
    X509Certificate2 storedCert;
    X509Store store = new X509Store(StoreName.My, StoreLocation.LocalMachine);

    try
    {
        // Open for read-only access
        store.Open(OpenFlags.ReadOnly);

        // Find the cert (the final argument, true, returns only valid certificates)
        storedCert = store.Certificates.Find(X509FindType.FindBySerialNumber,
                                             ClientSigningCertificateSerialNumber,
                                             true)
                          .OfType<X509Certificate2>().SingleOrDefault();
    }
    finally
    {
        store.Close();
    }

    return storedCert;
}
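Because the lookup above passes true for the valid-only argument, a certificate whose chain is not trusted on the web server is silently skipped and the method returns null. The PowerShell sketch below repeats the same lookup outside the application so the two cases can be told apart; the serial number is a placeholder and the script is a diagnostic aid, not part of the generated code.

```powershell
$serial = 'serial'   # same value as ClientSigningCertificateSerialNumber in Web.config

$storeName     = [System.Security.Cryptography.X509Certificates.StoreName]::My
$storeLocation = [System.Security.Cryptography.X509Certificates.StoreLocation]::LocalMachine
$findType      = [System.Security.Cryptography.X509Certificates.X509FindType]::FindBySerialNumber

$store = New-Object System.Security.Cryptography.X509Certificates.X509Store -ArgumentList $storeName, $storeLocation
$store.Open([System.Security.Cryptography.X509Certificates.OpenFlags]::ReadOnly)
try {
    # Third argument: $true returns only certificates that pass validation.
    $valid = $store.Certificates.Find($findType, $serial, $true)
    $any   = $store.Certificates.Find($findType, $serial, $false)
    "Matches (valid only): $($valid.Count); matches (any): $($any.Count)"
}
finally {
    $store.Close()
}
```

If the second count is 1 but the first is 0, the certificate is present but fails validation, which usually points at the certificate chain rather than at the serial number.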

Package the remote web application

- In Solution Explorer, right-click the web application project (not the SharePoint Add-in project), and select Publish.

- On the Profile tab, select New Profile on the drop-down list.

- When prompted, give the profile an appropriate name.

- On the Connection tab, select Web Deploy Package in the Publish method drop-down list.

- For Package location, use any folder. To simplify later procedures, this should be an empty folder. The subfolder of the bin folder of the project is typically used.

- For the site name, enter the name of the IIS website that will host the web application. Do not include protocol or port or slashes in the name; for example, "PayrollSite." If you want the web application to be a child of the Default Web Site, use Default Web Site/<website name>; for example, "Default Web Site/PayrollSite." (If the IIS website does not already exist, it is created when you execute the Web Deploy package in a later procedure.)

- Click Next.

- On the Settings tab select either Release or Debug on the Configuration drop down.

- Click Next and then Publish. A zip file and various other files that will be used to install the web application in a later procedure are created in the package location.

To create a SharePoint Add-in package

- Right-click the SharePoint Add-in project in your solution, and then choose Publish.

- In the Current profile drop-down, select the profile that you created in the last procedure.

- If a small yellow warning symbol appears next to the Edit button, click the Edit button. A form opens asking for the same information that you included in the web.config file. This information is not required since you are using the Web Deploy Package publishing method, but you cannot leave the form blank. Enter any characters in the four text boxes and click Finish.

- Click the Package the add-in button. (Do not click Deploy your web project. This button simply repeats what you did in the final step of the last procedure.) A Package the add-in form opens.

- In the Where is your website hosted? text box, enter the URL of the domain of the remote web application. You must include the protocol, HTTPS, and if the port on which the web application will listen for HTTPS requests is not 443, you must include the port as well; for example, https://MyServer:4444. (This is the value that Office Developer Tools for Visual Studio uses to replace the ~remoteAppUrl token in the add-in manifest for the SharePoint Add-in.)

- In the What is the add-in's Client ID? text box, enter the client ID that was generated on the appregnew.aspx page, and which you also entered in the web.config file.

- Click Finish. Your add-in package is created.